Axial: https://linktr.ee/axialxyz

Axial partners with great founders and inventors. We invest in early-stage life sciences companies such as Appia Bio, Seranova Bio, Delix Therapeutics, Simcha Therapeutics, among others often when they are no more than an idea. We are fanatical about helping the rare inventor who is compelled to build their own enduring business. If you or someone you know has a great idea or company in life sciences, Axial would be excited to get to know you and possibly invest in your vision and company. We are excited to be in business with you — email us at [email protected]

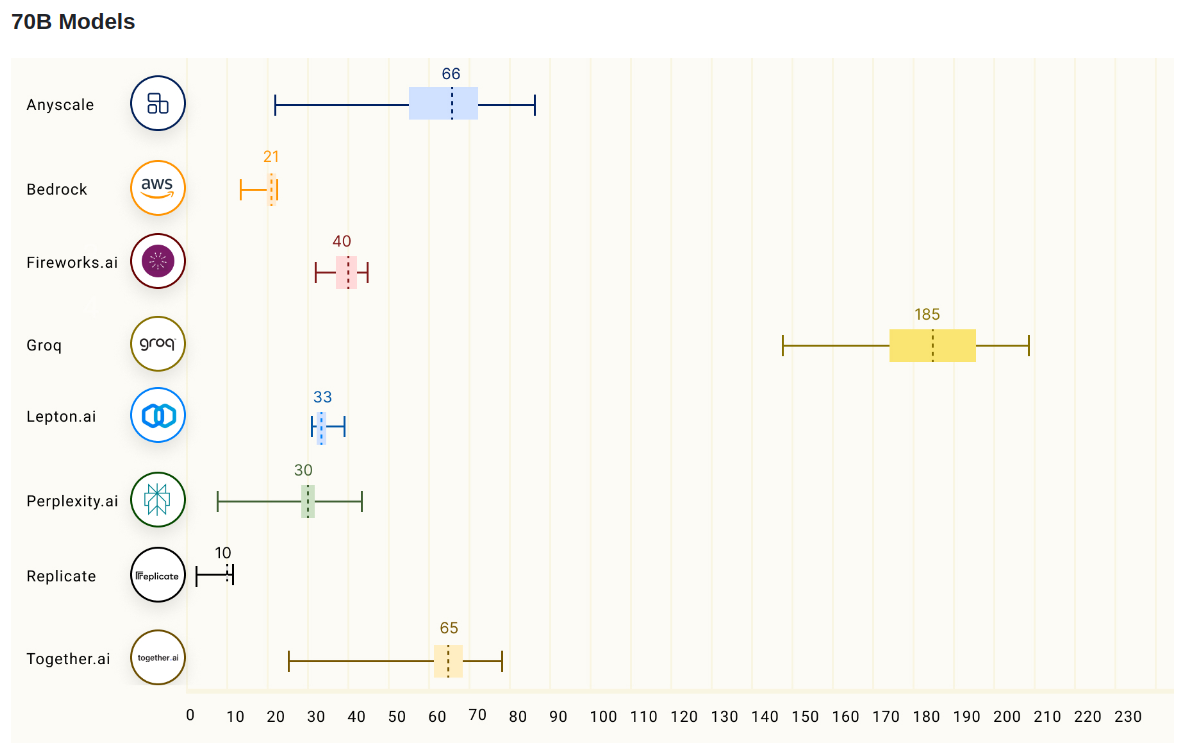

Groq is a chip company focused on pairing memory and compute to speed up inference for AI models. A key product is their Language Processing Unit Inference Engine, manufactured with GlobalFoundries’ 14-nanometer technology, which is a few generations behind the TSMC technology that makes the Nvidia H100. The company’s approach to inference, relying solely on SRAM for memory and splitting large models across networked cards, presents an intriguing proposition.

The core of Groq's inference strategy is its "SRAM-only" architecture, eliminating the need for external memory like GDDR or HBM. This choice has both advantages and disadvantages. On the positive side, SRAM offers significantly faster access times compared to DRAM, which is crucial for achieving high inference speeds. This direct access to data allows Groq's Language Processing Units (LPUs) to work efficiently with the large models, bypassing the bottlenecks often encountered with external memory.

However, the dependence on SRAM also comes with limitations. The significant cost of SRAM, coupled with its lower capacity compared to DRAM, presents a significant obstacle. This constraint necessitates the partitioning of large models across multiple interconnected cards, potentially introducing latency and complexity into the communication process. Their per-token costs might end up actually being high (i.e. 1-2 orders of magnitude) due to these limits. With the long-term plan to keep pricing the same and work to have next-generation chips change this cost structure.

While Groq's approach to inference is promising, its implementation raises further questions. Instead of opting for a more economical and scalable solution like tiling smaller chips on a motherboard, Groq chose to package its LPUs as individual PCIe cards, relying on long-range interconnects. This choice adds considerable cost and complexity, negating some of the potential benefits of their SRAM-only architecture.

The use of large, expensive reticle-sized dies on individual cards, combined with high TDPs and long interconnects, creates several challenges:

Increased Power Consumption: High TDPs and long interconnect wires lead to increased power consumption, potentially eroding the efficiency gains achieved through SRAM.

Cost Barrier: The use of large, expensive dies coupled with PCIe cards contributes to a significant cost barrier, potentially limiting the accessibility of Groq's technology.

Scalability Concerns: The reliance on individual cards and long-range interconnects introduces scalability limitations compared to a tiled approach, where smaller chips can be more easily and affordably scaled for larger models.

The recent Microsoft research paper proposing a tiled approach with smaller, cheaper SRAM-heavy chips on a motherboard presents a stark contrast to Groq's approach. This alternative strategy emphasizes lower power consumption, reduced cost, and improved scalability. By eliminating the need for long-range interconnects and expensive PCIe cards, the Microsoft approach aims to achieve similar performance with greater efficiency and accessibility.

The trajectory of Graphcore, another company focused on SRAM-heavy inference architectures, serves as a cautionary tale for Groq. Despite its promising technology, Graphcore struggled to gain significant traction in the market, ultimately facing financial challenges and falling into relative obscurity. Graphcore's difficulties highlight the potential pitfalls of relying heavily on a specific hardware approach, particularly when facing competition from established players with more established ecosystems.

Groq's SRAM-only approach and its implementation have generated both excitement and skepticism. While its exceptional inference speed is undeniable, the challenges surrounding cost, power consumption, and scalability cannot be ignored. Groq must carefully navigate these challenges to avoid following the path of Graphcore and secure a place in the rapidly evolving landscape of AI inference. In July, Groq put out a demonstration of its chip’s inference speed - exceeding 1,250 tokens per second running Meta’s Llama model.

Ultimately, Groq's success will depend on its ability to achieve a balance between its impressive speed and the practical considerations of cost, power consumption, and scalability. Finding ways to optimize their design, reduce costs, and improve scalability will be critical for them to maintain momentum and compete effectively in the dynamic AI landscape.